JavaScript’s story begins in May 1995 at Netscape Communications. In a frantic rush to add interactivity to the web, Netscape hired Brendan Eich and gave him an almost impossible deadline: design and implement a new scripting language for the browser in a mere 10 days. Eich prototyped the language, code-named Mocha at the time (later LiveScript and finally JavaScript), in “ten contiguous days” in time for the beta of Netscape Navigator 2.0. This breakneck timeline left little room for careful language design or thorough testing. JavaScript was very much a product of its hectic birth.

Why the rush? At the time, Java was the hot new language, and Netscape had struck a partnership with Sun Microsystems. Netscape wanted a lightweight “glue language” to complement Java: something accessible to web designers and non-programmers, in contrast to Java’s heavyweight applet approach. Marketing even dictated that this new language should look vaguely like Java (to ride on Java’s hype), hence the confusing name “JavaScript”. In reality the two languages had very little in common. Eich later quipped that he was “under marketing orders to make it look like Java but not make it too big for its britches… it needed to be a silly little brother language”. In other words, JavaScript was intentionally designed to be a simpler, more forgiving scripting tool, “a silly little brother” to Java, suitable for animating image rollovers or validating forms, not for building large applications. The name itself was a marketing gimmick Eich has openly resented, calling it “a big fat marketing scam”.

This whirlwind genesis meant JavaScript inherited some birthmarks. It was created so quickly that certain design decisions (or mistakes) became baked into the language. For example, JavaScript’s type system was made extremely loose (everything is essentially a variant type under the hood), and it had to intermingle with browser objects in unorthodox ways. The primary goal was to ship something that worked fast. As a result, the earliest version of JavaScript (shipped in Netscape 2.0) was rough and quirky. Even Eich didn’t consider it a real programming language at first, more a handy bit of browser glue. But users and developers immediately started relying on its behavior. By the time flaws were discovered, it was often too late to change them without breaking websites. The Web never forgets: once code is out in the wild, people build on it, and bug-for-bug compatibility becomes a feature. Many of the language’s later oddities and warts trace directly back to choices made (or rushed) in those first ten days.

ECMAScript and the Browser Wars

JavaScript’s popularity exploded alongside the early Web, but soon multiple implementations emerged. Microsoft reverse-engineered JavaScript for Internet Explorer and called their version JScript. Without intervention, there was a risk that JavaScript in Netscape and JScript in IE would diverge into incompatible dialects, fracturing the web. To prevent this, in 1996 Netscape submitted JavaScript to an international standards body, Ecma International, for standardization. The result was ECMAScript (with ECMAScript 1 standardized in 1997), essentially a language spec to ensure different browsers implement the same core JavaScript language. In theory, ECMAScript standardization was a helpful contribution: it created a formal language specification so that JavaScript (as implemented by Netscape, Microsoft, etc.) would behave consistently, making web pages interoperable across browsers.

In practice, however, the late 90s and early 2000s still saw plenty of fragmentation. The Browser Wars were raging: Netscape vs. Internet Explorer. Each browser introduced proprietary quirks. For example, IE introduced non-standard objects like document.all, and both browsers had slightly different DOM APIs. Core language features were mostly aligned with ECMAScript, but uneven adoption and browser-specific bugs meant developers often had to write defensive code along the lines of “if Netscape, do this; if IE, do that.” The standards process did gradually bring convergence: for instance, ECMAScript 3 (ES3) in 1999 was widely implemented, and it reined in some differences. But progress on the language itself then stalled for nearly a decade due to industry disagreements.

Notably, ECMAScript 4 (ES4) in the mid-2000s was an ambitious overhaul that proposed major new features (like classes, modules, optional static types, etc.) to modernize JavaScript. However, ES4 became a cautionary tale in standards politics. It introduced so many changes (some not backward-compatible) that it ran into fierce controversy and vendor resistance. Different camps had different visions for JavaScript’s future: some wanted a more robust, Java-like language, while others worried this would make the web’s scripting too complex and break old scripts. By 2008 the parties reached a truce: ES4 was abandoned without being released (the missing version of JavaScript). Instead, a more conservative update, ECMAScript 3.1, was renamed to ECMAScript 5 and finalized in 2009. ES5 added useful but modest improvements (like JSON, Array.prototype.forEach, and strict mode) without the radical changes of ES4. The abandonment of ES4 showed how backward compatibility and consensus triumphed over drastic redesign, a pattern repeated throughout JavaScript’s evolution. The language could grow, but carefully, so as not to break the Web.

By the late 2000s, a renewed interest in JavaScript was fueled by AJAX and the rise of rich web applications. All major browsers started competing on JavaScript performance (leading to JIT-compiling engines like Chrome’s V8 in 2008) and on rapid adoption of new ECMAScript features. This competition, ironically, brought more alignment with standards: each browser wanted to boast full support of the latest spec. After ES5 (2009) came a long wait for ES6 (2015), which brought big new features like classes, modules, arrow functions, and let/const; since then, the ECMAScript standards process has delivered annual updates (ES2016, ES2017, and so on). The standards body (TC39) became a model of open evolution, where proposals go through stages and the community can give feedback. One could argue the standards process has been mostly helpful: it provided a common roadmap and prevented any single player from forking JavaScript’s core syntax or semantics too far.
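To make the feature lists above concrete, here is a small sketch contrasting a few ES5 additions (strict mode, the JSON object, Array.prototype.forEach) with some ES2015 syntax (class, let/const, arrow functions, template literals). The data and names are invented for illustration, and modules are left out because they need more than one file to demonstrate.

```javascript
"use strict"; // ES5: opt this script into strict mode

// ES5 (2009): the built-in JSON object and Array.prototype.forEach
var payload = JSON.stringify({ dialects: ["JavaScript", "JScript"] });
var parsed = JSON.parse(payload);
var upperNames = [];
parsed.dialects.forEach(function (name) {
  upperNames.push(name.toUpperCase());
});

// ES2015 (ES6): class syntax, const/let, arrow functions, template literals
class Edition {
  constructor(name, year) {
    this.name = name;
    this.year = year;
  }
  label() {
    return `${this.name} (${this.year})`;
  }
}

const editions = [new Edition("ES5", 2009), new Edition("ES2015", 2015)];
const labels = editions.map((e) => e.label());

let newest = editions[0]; // let can be reassigned; const cannot
for (const e of editions) {
  if (e.year > newest.year) newest = e;
}

console.log(upperNames);  // [ 'JAVASCRIPT', 'JSCRIPT' ]
console.log(labels);      // [ 'ES5 (2009)', 'ES2015 (2015)' ]
console.log(newest.name); // ES2015
```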

However, the story of standards isn’t all sunshine. Fragmentation still occurred in other ways. During the years IE had a near-monopoly (~2000–2006), Microsoft slowed its implementation of new JavaScript features (IE stuck at ES3 for a long time). This effectively held the language back: developers couldn’t rely on new features until IE supported them, which took years. In response, libraries like jQuery emerged (around 2006) to smooth over browser differences and provide a unified way to script the web. It wasn’t the language spec that fragmented, but the ecosystem, with its myriad workarounds and patterns to cope with inconsistent environments.

Another form of fragmentation came from outside the browser: developers dissatisfied with JavaScript’s quirks created compile-to-JavaScript languages such as CoffeeScript, Dart, and TypeScript, which introduce their own syntax or typing and then compile down to JavaScript. This compiles-to-JS movement implicitly critiques JavaScript’s design (why invent another language otherwise?) even as it leverages JavaScript’s ubiquity as a runtime. While these alternatives aren’t part of ECMAScript, they reflect the continued tension between JavaScript’s legacy design and developers’ modern needs. The standards committee has even adopted some ideas (TypeScript influenced many proposals, for example).

Standardization via ECMAScript was important for keeping JavaScript unified during the browser wars, and it enabled steady improvements. Yet the need to maintain backward compatibility and get buy-in from multiple stakeholders often meant oddities were preserved and ambitious fixes were sometimes shelved. The result is that JavaScript today is a mix of old design decisions and new additions, still carrying all of its historical baggage.

The V8/Chrome Era

For most of the web’s history there were multiple independent JavaScript engines: Netscape’s SpiderMonkey (which lives on in Firefox), Microsoft’s JScript and later Chakra in Internet Explorer and the original Edge, Apple’s JavaScriptCore in Safari, Opera’s Carakan, and, from 2008, Google’s V8 in Chrome. This engine diversity meant no single company fully controlled JavaScript’s fate. But fast forward to the mid-2020s, and the landscape has consolidated dramatically. Google’s Chrome (and Chromium, its open-source base) now dominates browser market share: around 65–70% of users if you include Chrome plus Chromium-based Edge, Brave, Opera, etc. With Microsoft discontinuing its own engine in favor of Chromium’s in 2020, the Blink/V8 combination now powers the vast majority of browsers, leaving only two major alternatives: Mozilla’s Gecko (for Firefox) and Apple’s WebKit (for Safari).

This near-monoculture has raised concerns about a de facto Google monopoly over web technology. When one engine (V8) becomes so dominant, there’s a risk that web developers stop testing their sites in other engines, or use non-standard Chrome-specific features, leading to a “Chrome only” web. Even standards can become a bit skewed if one player implements new APIs faster and others struggle to catch up. For example, Google often pushes experimental features via Chrome’s origin trials. If these experiments become popular, they can pressure standards bodies to adopt them, or leave other browsers looking like laggards. There’s an ongoing debate about browser engine diversity: some argue that having at least three different engines (Blink/V8, Gecko, WebKit) is crucial to avoid repeating the bad old days of Internet Explorer’s monopoly. Others note that maintaining a modern JS engine and rendering engine is incredibly resource-intensive, and the web platform’s complexity naturally led to consolidation (even Microsoft gave up and joined forces with Chromium).

From a performance standpoint, the dominance of V8 has upsides: Google’s investment in V8’s JIT compiler and optimization techniques benefited everyone using Chrome or Node.js. The V8 engine (and its peers) has made JavaScript orders of magnitude faster than it was in the 90s, enabling JS to be used in ways Eich never imagined (server-side in Node, desktop apps via Electron, etc.). But the downside is an implicit monopoly: Google’s priorities could dictate the performance characteristics and extensions of JavaScript. For instance, if V8 optimizes certain patterns, developers may unknowingly write code that performs poorly on other engines. If Chrome implements a new JS feature or API first, web apps may start depending on it before Firefox or Safari catch up, effectively letting Chrome set the pace of web innovation.

Another aspect of fragmentation is how JavaScript forks into different runtime contexts: not just browsers, but Node.js (server-side) and more recently Deno and others. Node.js uses Google’s V8 as well, but Node adopted its own module system (CommonJS) before the JS standard had modules, leading to a period of fragmentation in how modules are defined. Convergence is happening now that ES Modules are standard and supported in Node and browsers, but it took time. It’s a reminder that even outside the browser, decisions in one environment can create divergence that standards then have to reconcile.

Today’s JavaScript world is paradoxically both monocultural and fragmented. It’s monocultural in that one engine stack (Chromium’s Blink and V8) has an outsized influence, a situation some have likened to the old IE6 problem, only with Chrome. Yet it’s fragmented in that there are still multiple runtimes and slight differences in implementation, and certainly still differences in API support (especially outside the core language, in Web APIs: Safari, for example, may not support a cutting-edge API that Chrome does). The hope is that strong standards processes and competition (what little remains) will keep Chrome/V8 in check. The fear is that if Chrome’s dominance grows unchecked, we might lose the last independent engines and end up with a single corporate-controlled web platform. This means keeping an eye on portability: testing on non-Chrome browsers and avoiding reliance on engine-specific quirks is more important than ever, ironically echoing the advice from the 2000s to not code just for IE.
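One concrete way to avoid leaning on a single engine is feature detection: test for the capability you need rather than for a particular browser, and keep a fallback. A minimal sketch, using structuredClone (available in current browsers and recent Node.js versions) as the example capability; deepCopy is a hypothetical helper name, and the JSON fallback is deliberately simple, with its limits noted in the comments.

```javascript
// Copy plain data portably: prefer the built-in when the engine has it,
// otherwise fall back to something every ES5+ engine supports.
function deepCopy(value) {
  // typeof on an undeclared name is safe, so this works even in old engines.
  if (typeof structuredClone === "function") {
    return structuredClone(value); // also handles Dates, Maps, Sets, cycles
  }
  // Fallback: JSON round-trip. Only safe for JSON-compatible data
  // (functions and undefined are dropped, Dates become strings).
  return JSON.parse(JSON.stringify(value));
}

const original = { name: "page", tags: ["static", "lean"] };
const copy = deepCopy(original);
copy.tags.push("fast");
console.log(original.tags); // [ 'static', 'lean' ] (the original is untouched)
```

The same shape, "check for the feature, then use it or degrade gracefully", replaces the old "if Netscape, do this; if IE, do that" branching without tying the code to any one vendor.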

Design Flaws

JavaScript is infamous for its illogical or surprising behaviors. Many of these oddities stem from JavaScript’s rushed design and the need for backward compatibility (what was done in 1995 can’t be undone later, only papered over). Let’s explore some of the most notorious examples and why they happen. Brace yourself: this is where JavaScript’s personality really shines, often with a dose of sarcasm.

Order Matters: [] + {} vs { } + []

If you take an empty array and add an empty object, what do you get? In JavaScript, the answer depends on the order of the operands!

console.log([] + {}); // => "[object Object]"

{} + []; // => 0 when entered as its own statement, e.g. typed into a browser console

Yes, you read that right: swapping the operands changes the result from a string to a number. What on earth is going on?

The expression [] + {} converts both operands to primitives: an empty array becomes "" (an empty string), and an empty object becomes "[object Object]" (via its default toString method). So "" + "[object Object]" results in the string "[object Object]". It’s basically doing string concatenation. But {} + [] is parsed differently: when {} appears at the start of a statement, JavaScript treats it as a standalone (empty) block rather than an object literal. So {} is treated as nothing, and what remains is +[]. The unary + operator attempts to convert the array to a number, and an empty array coerced to a number becomes 0. Thus {} + [] ends up as 0. Put the same expression anywhere JavaScript expects a value, such as inside parentheses or as an argument to console.log, and {} is an ordinary object literal again, so ({} + []) also yields "[object Object]".
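The conversions described above can be replayed one step at a time. A small sketch, runnable in Node.js or a browser console; eval is used here only to force the statement-level parse that a console applies to a line beginning with {.

```javascript
// Both operands of binary + are first converted to primitives;
// for these two objects that gives the same strings as String():
console.log(String([])); // "" (empty array -> empty string)
console.log(String({})); // "[object Object]"

// One operand is now a string, so + concatenates.
const arrayPlusObject = [] + {}; // "" + "[object Object]"

// In expression position {} is an object literal, so the "swapped"
// version is the very same concatenation.
const objectPlusArray = ({} + []);

// At the start of a statement, {} is an empty block and only +[] remains.
const statementParse = eval("{} + []"); // block, then unary +[]
const unaryPlus = +[];                   // [] -> "" -> 0

console.log(arrayPlusObject, objectPlusArray, statementParse, unaryPlus);
// [object Object] [object Object] 0 0
```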

null == undefined but null === undefined is false

In JavaScript, == is the loose equality operator, which performs type coercion, whereas === is the strict equality operator, which doesn’t. The result? null == undefined returns true, but null === undefined returns false. Why?

The loose equality rules explicitly say that null and undefined are considered equal to each other and not equal to anything else. It’s a special case baked into the language: null and undefined both represent “no value” in different ways, so the designers figured it would be handy if x == null caught both the null and the undefined case. Strict equality, however, performs no type conversion, and since null belongs to the Null type and undefined to the Undefined type, they are not strictly equal.

In practice, this means using == can lead to comparisons that are unexpectedly true, while === is almost always the recommended choice. It’s JavaScript’s way of being helpfully confusing: by guessing that you meant either null or undefined with ==, the language creates a pitfall. Loose equality will also consider the number 0 equal to the string "0", among other coercions. Many style guides simply ban == and != to avoid these gotchas, but legacy code that relies on null == undefined being true still abounds. This odd duality exists entirely for legacy reasons: early JS had only ==, and by the time === was introduced, == had to keep its weird rules for compatibility. The take-home: always use === unless you have a very good reason not to.
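The rules above in runnable form. This is only a sketch covering a handful of cases; isMissing is a hypothetical helper name illustrating the one x == null idiom that some style guides still permit.

```javascript
// Loose equality: null and undefined match each other and nothing else.
console.log(null == undefined);  // true
console.log(null === undefined); // false (different types: Null vs Undefined)
console.log(null == 0);          // false (== does not coerce null to 0)
console.log(undefined == "");    // false

// Other == coercions that === refuses to perform:
console.log(0 == "0");  // true  ("0" is converted to 0)
console.log(0 === "0"); // false
console.log("" == 0);   // true  ("" is converted to 0)

// x == null catches both "no value" cases in a single comparison.
function isMissing(x) {
  return x == null; // same as x === null || x === undefined
}
console.log([null, undefined, 0, "", false].map(isMissing));
// [ true, true, false, false, false ]
```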

typeof NaN === 'number'

Here’s a fun one. NaN stands for “Not a Number”. One might reasonably expect that if you ask JavaScript the type of NaN, it would say “not a number”, or maybe “NaN”. Nope:

console.log(typeof NaN); // "number"

Yes, JavaScript insists that this non-number is actually of type Number. It sounds like a joke, but there is a rationale. In JavaScript, all numeric values are IEEE-754 floating point under the hood, and NaN is actually a special numeric value in that 64-bit float format (a specific bit pattern indicating an invalid number). So the typeof operator, which has a limited set of possible return strings, returns “number” for any numeric value, NaN included.

From the language’s internal perspective, NaN is a kind of number (the result of undefined or invalid numeric operations). Of course, to humans, “Not-a-Number” being labeled a number is deeply confusing, it’s an irony that has generated a lot of snark. (One might say JavaScript is so inclusive, even values that are not numbers identify as numbers! 😜)

The proper way to check for NaN is not by type, but with a specific function: Number.isNaN(x) tells you whether a value is the NaN value. As a bonus quirk, NaN is the only value in JavaScript that is not equal to itself: NaN === NaN is false. That means you can’t even use a normal equality check to see if something is NaN, because NaN never equals anything (by design in IEEE-754). This is why Number.isNaN exists, alongside the older global isNaN, whose caveat is that it converts its argument to a number first (so isNaN("hello") is true). In short, typeof NaN === 'number' is technically correct (NaN is a value of the Number type) but practically a gotcha.

It’s another artifact of the language’s inner workings leaking through a simple API.
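The NaN checks described above, side by side. A short sketch; isNaNValue is a hypothetical helper that relies on the "not equal to itself" property.

```javascript
const invalid = 0 / 0; // an invalid numeric operation produces NaN

console.log(typeof invalid);        // "number"
console.log(invalid === invalid);   // false: NaN is never equal to itself
console.log(Number.isNaN(invalid)); // true

// The global isNaN converts its argument to a number first,
// so a non-numeric string is reported as NaN too:
console.log(isNaN("hello"));        // true  (Number("hello") is NaN)
console.log(Number.isNaN("hello")); // false (a string, not the NaN value)

// NaN is the only value that is not equal to itself, so this also works:
const isNaNValue = (x) => x !== x;
console.log(isNaNValue(invalid), isNaNValue("hello")); // true false
```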

[] == ![] is true (and yes, [] is truthy)

Now for a real brain-bender. JavaScript has both truthy/falsy values and loose equality, and in this example they collide spectacularly. An empty array [] is a value that, in a boolean context, is considered truthy (because it’s a non-null object). So ![] (logical NOT of an array) is false: since the array is truthy, negating it gives false. Now, what is [] == ![]? This becomes [] == false. When you compare an object (array) to a boolean with ==, JavaScript converts the boolean to a number (that’s one of the weird == rules), so false becomes 0. Then it converts the other side (the array) to a primitive for the comparison: an empty array becomes "" (empty string), which in turn converts to the number 0. So we get 0 == 0, which is true. Thus, [] == ![] is true! Meanwhile, [] == [] is false, because two distinct object instances are never equal under == or === (they’re reference types).

An empty array is truthy (it behaves like true if you put it in an if), yet [] == false is true, meaning in a loose comparison it behaves like false. In JavaScript, apparently, an empty array can be both truthy and loosely falsy depending on context, a nice little contradiction. This particular wart is a poster child for JavaScript’s coercion rules gone wild. The logic is documented (the spec steps for Abstract Equality say if one side is boolean, convert it to number, etc.), but it’s utterly non-intuitive.

Legacy is again to blame: these coercion rules were in the first spec to allow, for example, if (array) ... to run (truthy objects) while also allowing numeric comparison with booleans. It seemed like a good idea in the 90s to have flexible typing; in hindsight, it causes endless confusion. The safest practice: avoid == with mixed types entirely (as mentioned) and avoid writing code that relies on truthiness of arrays or objects. Use explicit length checks for arrays, for instance. That way you’ll never have to explain to a coworker why [] == ![] is true.
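The coercion chain for [] == ![] can be replayed step by step. A sketch; hasItems is a hypothetical helper showing the explicit length check recommended above.

```javascript
const empty = [];

console.log(Boolean(empty)); // true: every object, including [], is truthy
console.log(!empty);         // false

// [] == ![] becomes [] == false, and then:
console.log(Number(false));  // 0  (the boolean operand becomes a number)
console.log(String(empty));  // "" (the array operand becomes a primitive)
console.log(Number(""));     // 0  ("" becomes a number)
console.log([] == ![]);      // true, because it ends up as 0 == 0
console.log([] == []);       // false: two different objects

// Safer: ask the question you actually mean.
function hasItems(list) {
  return Array.isArray(list) && list.length > 0;
}
console.log(hasItems(empty), hasItems([1])); // false true
```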

typeof null === 'object'

This might be the granddaddy of JavaScript warts, and unlike some others, it’s actually an acknowledged bug in the language that has never been fixed for backward compatibility reasons. If you run typeof null, you get “object”.

But null is not an object, it’s supposed to signify “no object” or “no value”. So why would JS say it’s an object? The reason goes deep into history: in the original implementation of JavaScript, values were tagged with a type identifier under the hood. The tag for “object” was zero. And null was represented as the NULL pointer (zero), so it kind of accidentally got the object tag. It was essentially a bug in the first version. By the time this was discovered, scripts everywhere were already doing typeof x === 'object' to check for objects (often expecting null to be included in that). Fixing it to return “null” for null would have been “the right thing” logically, but it would have broken a lot of existing code that (for better or worse) relied on typeof null being “object”.

The ECMAScript committee actually considered correcting this: a proposal long ago suggested making typeof null === 'null' in some strict mode, but it was rejected because it would cause too much breakage. So to this day, typeof null is a special-case gotcha. The proper way to check for null is to compare directly: x === null. Many frameworks internally use a check like obj === null || typeof obj !== "object" to rule out null (and primitives) before treating a value as an object.
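A short sketch of the pitfall and the usual guard. isObjectLike is a hypothetical helper name, written as the positive form of the framework-style check (x !== null && typeof x === "object").

```javascript
console.log(typeof null);      // "object" (the legacy bug)
console.log(typeof {});        // "object"
console.log(typeof undefined); // "undefined"

// Checking for null itself: compare directly.
const value = null;
console.log(value === null);   // true

// Checking for a "real" object: rule out null explicitly.
// (Functions report typeof "function", so they are excluded here too.)
function isObjectLike(x) {
  return x !== null && typeof x === "object";
}
console.log([null, {}, [], "text", 42].map(isObjectLike));
// [ false, true, true, false, false ]
```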

The average JS beginner, however, trips on this at least once, thinking “Wait, null is an object?” The answer: no, it isn’t. It just returns that string due to a legacy bug. As one source dryly notes, it’s “a historical mistake… now too late to change without breaking old code.”

We messed up in the 90s, and now we all live with it. This exemplifies how backward compatibility keeps JavaScript’s design flaws alive indefinitely: you can’t fix typeof null, or half the web might break.

We could go on (JavaScript has a bottomless pit of these curiosities), but the above are some of the greatest hits. There are entire lists and repositories, such as WTFJS, collecting examples where JavaScript behaves in ways that defy common sense. To be fair, each of these behaviors is defined in the specification, and there are reasons, often historical, why they do what they do. But that doesn’t make them any less frustrating the first time you encounter them.

The silver lining is that over time, TC39 (the committee that evolves JavaScript) has tried to mitigate these issues.

For example, ES6 introduced Object.is(), which reliably detects NaN (Object.is(NaN, NaN) is true, unlike NaN === NaN) and distinguishes 0 from -0. ES5 had already introduced strict mode, and newer syntax avoids some other pitfalls. But the old stuff can’t be removed: the need for legacy web compatibility is paramount. “Don’t break the Web” is basically JavaScript’s prime directive, for better or worse. This is why bizarre things like with (a problematic feature that strict mode forbids but non-strict code can still use), the == coercion rules, and typeof null remain with us: millions of webpages depend on them behaving exactly as originally implemented.
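The two mitigations mentioned above, side by side. A sketch, assuming Node.js or a browser console; compiles is a hypothetical helper that only reports whether a snippet parses. It relies on the fact that code passed to new Function is non-strict unless it contains its own "use strict" directive.

```javascript
// Object.is (ES2015) differs from === in exactly two cases:
console.log(NaN === NaN, Object.is(NaN, NaN)); // false true
console.log(0 === -0, Object.is(0, -0));       // true false

// Strict mode (ES5) turns some legacy features into errors; `with` is a
// SyntaxError in strict code. new Function lets us compare both modes here.
function compiles(source) {
  try {
    new Function(source);
    return true;
  } catch (err) {
    if (err instanceof SyntaxError) return false;
    throw err; // anything other than a parse error is unexpected
  }
}

console.log(compiles("with ({ a: 1 }) { a; }"));               // true  (non-strict)
console.log(compiles('"use strict"; with ({ a: 1 }) { a; }')); // false (strict)
```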

We can certainly laugh at these oddities (and using humor is one way to stay sane when debugging JavaScript!). But they also underscore an important point: JavaScript’s design flaws largely stem from its rushed creation and the subsequent mandate of backward compatibility. The language we have today is the way it is because Eich’s 10-day prototype couldn’t anticipate 25+ years of usage, and once the train left the station, it couldn’t reverse without derailing the web.

Obesity Epidemic

JavaScript’s ubiquity has had an unintended side effect: we tend to throw JavaScript at every problem, often unnecessarily. Entire websites are built as single-page JavaScript applications for what could be simple static content. We’ve reached a point where the average website is bloated with megabytes of script, frameworks, analytics, fancy effects, even when delivering mostly text or images. This has real costs, both performance-wise and environmentally.

Consider this sobering comparison: one analysis found that a short local news article about hospital curry and crumble shipped so much JavaScript that “the JavaScript alone… is longer than Remembrance of Things Past”, Marcel Proust’s epic 3 MB novel. In fact, tech commentator Maciej Cegłowski pointed out the irony that even articles lamenting web page bloat are themselves bloated: a 2012 GigaOm piece warning that average pages topped 1 MB was itself 1.8 MB; a 2014 follow-up warning of 2 MB pages was 3 MB in size. We have been unknowingly complicit in a sort of website obesity epidemic. The result is sluggish websites that tax devices and networks.

Environmental impact

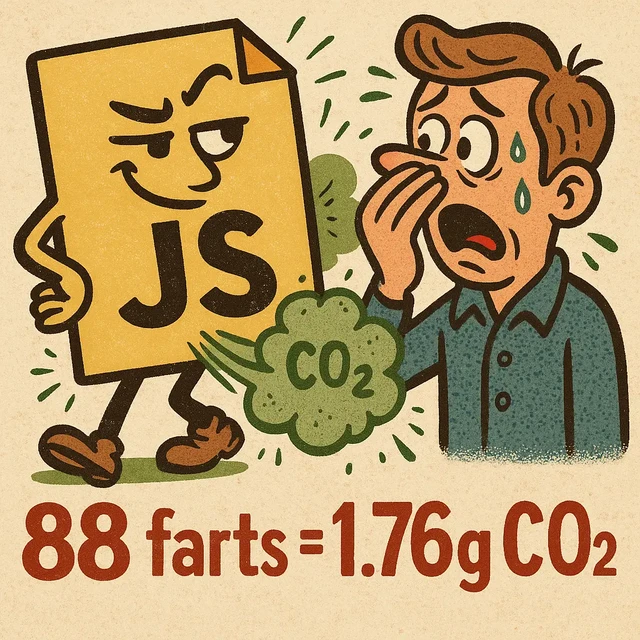


Performance isn’t just a nicety; it has cascading effects. Billions of users accessing heavy sites suffer longer load times and higher battery drain on mobile devices. But even beyond user experience, there’s a growing awareness of the energy consumption of web browsing. Every byte transferred and every script executed consumes electricity, in data centers, network routers, and user devices. The world’s data centers and network infrastructure consume on the order of 400 terawatt-hours of electricity per year, and a chunk of that is for serving websites and running all this code. It may seem abstract, but it adds up. On average, a single page view on a typical website produces about 1.76 grams of CO₂. Heavier sites can produce an order of magnitude more. As an analogy, 1.76 g is roughly equivalent to the CO₂ in 88 “human farts”, and some bloated sites emit “961 farts” worth per view.

In less flatulent terms, some sites can emit ~20 g of CO₂ for one page load. Multiply that by millions or billions of visits, plus the effect of bots (search engine crawlers that now often run JS to index content), and the carbon footprint of unnecessary JavaScript is quite real. One developer estimated that shaving off just 1 KB of JavaScript on 2 million websites could reduce CO₂ emissions by about 2950 kg per month. That’s roughly 35 tonnes of CO₂ a year (2950 kg × 12) for a mere kilobyte across sites; now imagine removing 100 KB or 1 MB of wasteful script.
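The arithmetic behind these figures, as a quick sketch. The constants are the estimates quoted above (not new measurements); yearlyKg is a hypothetical helper, and the one-million-views-per-month traffic figure is a made-up input chosen only to show the scale.

```javascript
// Inputs quoted in the text; all are rough published estimates.
const TYPICAL_GRAMS_PER_VIEW = 1.76;
const TYPICAL_FART_EQUIVALENT = 88;
const HEAVY_FART_EQUIVALENT = 961;
const SAVED_KG_PER_MONTH = 2950; // 1 KB less JS across 2 million sites

const gramsPerFart = TYPICAL_GRAMS_PER_VIEW / TYPICAL_FART_EQUIVALENT; // about 0.02 g
const heavyGramsPerView = HEAVY_FART_EQUIVALENT * gramsPerFart;        // about 19.2 g
const savedKgPerYear = SAVED_KG_PER_MONTH * 12;                        // 35,400 kg

// Yearly CO2 in kilograms for a page with a given monthly traffic.
function yearlyKg(gramsPerView, viewsPerMonth) {
  return (gramsPerView * viewsPerMonth * 12) / 1000;
}

const HYPOTHETICAL_VIEWS_PER_MONTH = 1e6;
console.log(heavyGramsPerView.toFixed(1)); // 19.2
console.log(savedKgPerYear);               // 35400
console.log(yearlyKg(TYPICAL_GRAMS_PER_VIEW, HYPOTHETICAL_VIEWS_PER_MONTH).toFixed(0)); // 21120
console.log(yearlyKg(heavyGramsPerView, HYPOTHETICAL_VIEWS_PER_MONTH).toFixed(0));      // 230640
```

At the same traffic, the heavy page's yearly footprint is roughly eleven times the typical one, which is the "order of magnitude" gap mentioned above.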

Economics of Performance

Beyond environmental impact, there’s the raw economics of performance. Mobile users in many parts of the world pay for data by the megabyte; a bloated web page can literally cost them money. And for users on slower networks or older devices, heavy JS can make sites practically unusable. We’ve all encountered pages that lock up the browser for seconds because some giant JS bundle is initializing. It’s not just annoying, it’s exclusionary. A JavaScript-everywhere approach tends to assume powerful hardware and fast internet, which many users don’t have.

The solution? It might be time to rediscover static HTML and lean websites, an HTML-first approach, using JavaScript more sparingly and deliberately. The web was originally static: servers delivered HTML documents for each page. We moved to dynamic, server-generated pages (PHP, etc.), and then to rich client-side apps that assemble everything in the browser with JavaScript. But we’ve now swung so far that even content sites load a React app just to show an article, or require megabytes of JS to render what could have been sent as pre-built HTML. That’s a lot of unnecessary computation happening on each user’s device. By embracing a more static approach (prerendering pages, using server-side rendering, or generating sites with modern static site generators), we cut down on client-side work. As one source notes, static sites don’t need to query databases or do heavy lifting on each request; they “require far less server load and thus use less energy.” They also often send less script to the client, because much of the content is already in the HTML. In cases where content doesn’t change frequently or doesn’t need to be highly interactive, static or cached pages are a huge win: they make the site faster, cheaper, and greener.
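What “pre-built HTML” means in practice can be shown with a toy build step. A minimal sketch, not any particular static site generator: escapeHtml, renderArticle, buildSite, the post data, and the output paths are all hypothetical, and a real build would write each string to disk (for example with Node’s fs.writeFileSync) and upload the files to a static host. The point is that rendering happens once, at build time, rather than on every visitor’s device.

```javascript
// Escape text before placing it in HTML so content can't inject markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;") // must run first so later entities aren't double-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Render one article to a complete HTML document; no client-side JS required.
function renderArticle(post) {
  const title = escapeHtml(post.title);
  return `<!doctype html>
<html lang="en">
<head><meta charset="utf-8"><title>${title}</title></head>
<body><article><h1>${title}</h1><p>${escapeHtml(post.body)}</p></article></body>
</html>`;
}

// "Build": map every post to an output path and its finished HTML.
function buildSite(posts) {
  const pages = new Map();
  for (const post of posts) {
    pages.set(`/posts/${post.slug}/index.html`, renderArticle(post));
  }
  return pages;
}

const pages = buildSite([
  { slug: "hello-static", title: "Static & lean", body: "No framework needed." },
]);
console.log([...pages.keys()]); // [ '/posts/hello-static/index.html' ]
```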

This isn’t to say no JavaScript at all. JS is vital for interactivity and modern user experiences. But we should be judicious. A good guiding principle is progressive enhancement: send a functional baseline page (HTML/CSS) and use JavaScript to enhance it when necessary. For example, a navigation menu might work as plain links, with JS adding a nicer dropdown behavior, rather than building the entire navigation via JS after load. Similarly, if you have a blog or documentation site, do you really need a complex SPA, or could it be a set of static files with a bit of JS for small enhancements? The latter will likely be more robust (works with JS disabled), faster to first render, and far lighter on both network and CPU.
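The navigation example above might look like this as a progressive enhancement. A minimal sketch under stated assumptions: the markup, the #site-nav id, the has-submenu class, and enhanceNavigation are all hypothetical, and for brevity the parent link doubles as the toggle (a production menu would more likely add a separate button so that link stays followable).

```javascript
// Baseline HTML (hypothetical): every submenu is a visible list of plain links,
// so navigation works with no JavaScript at all.
//   <nav id="site-nav"><ul>
//     <li class="has-submenu"><a href="/docs/">Docs</a>
//       <ul><li><a href="/docs/guide/">Guide</a></li></ul>
//     </li>
//   </ul></nav>

function enhanceNavigation(doc) {
  // No DOM (server, crawler, JS-less context): leave the plain links alone.
  if (!doc || typeof doc.querySelectorAll !== "function") return 0;

  const items = doc.querySelectorAll("#site-nav .has-submenu");
  items.forEach((item) => {
    const toggle = item.querySelector("a");
    const submenu = item.querySelector("ul");
    if (!toggle || !submenu) return;

    submenu.hidden = true; // collapse only once JS is known to be running
    toggle.setAttribute("aria-expanded", "false");
    toggle.addEventListener("click", (event) => {
      event.preventDefault(); // open the dropdown instead of following the link
      const willOpen = submenu.hidden;
      submenu.hidden = !willOpen;
      toggle.setAttribute("aria-expanded", String(willOpen));
    });
  });
  return items.length; // number of menus enhanced
}

if (typeof document !== "undefined") {
  enhanceNavigation(document);
}
```

If the script fails to load, nothing is hidden and every link still works; the JavaScript only adds behavior on top of a page that was already usable.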

We should also consider the cumulative impact of frameworks. It’s common for sites to include several third-party scripts (analytics, ads, social media widgets). Each one might seem “small,” but they add up and often pull in even more resources. A mindset of performance budgeting can help: setting a cap like “our page should be < 500 KB, with < 100 KB of JS” and sticking to it. Many top tech companies do this for their landing pages, knowing every additional script can reduce conversion due to slower speeds.
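A budget only helps if something checks it. A minimal sketch of such a check, assuming you already have a list of asset sizes (for example from your bundler’s output or a lab test); checkBudget, the asset list, and the numbers are all hypothetical, with the limits taken from the example cap above.

```javascript
// Budget from the example above: 500 KB in total, at most 100 KB of JS.
const BUDGET = { totalKB: 500, scriptKB: 100 };

// Each asset: a name, its type, and its transferred size in bytes.
function checkBudget(assets, budget) {
  const toKB = (bytes) => bytes / 1024;
  const sumBytes = (list) => list.reduce((sum, a) => sum + a.bytes, 0);

  const totalKB = toKB(sumBytes(assets));
  const scriptKB = toKB(sumBytes(assets.filter((a) => a.type === "script")));

  const violations = [];
  if (totalKB > budget.totalKB) {
    violations.push(`total ${totalKB.toFixed(1)} KB > ${budget.totalKB} KB`);
  }
  if (scriptKB > budget.scriptKB) {
    violations.push(`JS ${scriptKB.toFixed(1)} KB > ${budget.scriptKB} KB`);
  }
  return violations;
}

// Made-up page weight for illustration: 365 KB in total, 135 KB of it JS.
const assets = [
  { name: "index.html", type: "document", bytes: 30 * 1024 },
  { name: "app.js", type: "script", bytes: 90 * 1024 },
  { name: "analytics.js", type: "script", bytes: 45 * 1024 },
  { name: "hero.jpg", type: "image", bytes: 200 * 1024 },
];

const problems = checkBudget(assets, BUDGET);
console.log(problems); // [ 'JS 135.0 KB > 100 KB' ]
```

In a CI pipeline, a non-empty result could be made to fail the build (for instance by setting process.exitCode in Node.js), which turns the budget from a good intention into a rule.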

There’s a sustainability angle too: in an era where we are concerned about climate change, making web development choices that reduce energy usage is part of being a responsible technologist. It’s frankly low-hanging fruit – unlike refactoring a data center for efficiency, simply not shipping a giant JavaScript framework for a mostly-static site is an easy win. As the Gatsby team (promoters of a React-based static site generator) pointed out, dynamic sites use more CPU cycles than static, meaning more electricity and carbon emissions, whereas static sites, by serving pre-built pages, require far less server work, … less CO₂. And if you do need dynamic behavior, you can often achieve it with much smaller sprinkles of JavaScript or newer approaches like Web Components that don’t require wholesale rehydration of the entire DOM.

Let’s not forget the user experience for the bots and crawlers, an often invisible audience. Search engines like Google now run JavaScript in headless Chrome to index content, but it’s resource-intensive for them too. If your site can serve static HTML to crawlers (or be static overall), you’re reducing the load on those systems. And for the burgeoning world of bots (from social media previews to archivers), less script means they can retrieve your content faster and more reliably. There’s a reason the old wisdom that “the best request is the one you don’t make” and “the fastest code is the code that doesn’t run” still holds true.

JavaScript is a double-edged sword: an incredibly powerful, flexible language that has enabled the interactive web as we know it, but also a source of complexity, inefficiency, and the occasional head-scratching moment. We’ve traced how its history – from a ten-day hack to a standardized lingua franca – left a legacy of design oddities that we’re still dealing with decades later. We’ve looked at how standards and engines evolved, sometimes in harmony, sometimes in competition, leading to today’s scenario of a Chrome-dominated ecosystem. We’ve laughed (and cried) at some bizarre examples of JavaScript logic (or illogic), all in good humor, but each underscoring a real point about why thoughtful language design and the ability to fix mistakes matter. And importantly, we’ve considered the bigger picture: the cost of our JavaScript-heavy approach to web development, from slow websites to wasted energy on a global scale.

The path forward, especially for tech leaders and developers, involves a bit of critical reflection. It might be time to rein in our use of JavaScript, not to abandon it, but to use it more wisely. The modern web stack is starting to embrace this idea: there’s a resurgence of interest in MPAs (multi-page apps) and server-side rendering, new frameworks that are HTML-first (such as Qwik, Astro, and 11ty), and tools that only ship minimal JS to the client. Even the big single-page app frameworks are adopting optimizations like code-splitting, partial hydration, and resumability to mitigate their footprint. These are encouraging trends.

As we build the next generation of web experiences, the key is balance. Use JavaScript for what it’s great at (complex application logic, rich interactivity, realtime updates), but don’t use it just because. Plain HTML/CSS can often do the job with zero runtime cost. And when we do use JS, we should remember the lessons of its history: be cautious of its quirks (they will be with us forever), and be mindful of performance (every byte and CPU cycle matters when multiplied by millions of users). In a sense, the future of web development might be a bit of a throwback: embracing simplicity and robustness, much like the early days, but now on a far grander scale.

JavaScript isn’t going anywhere, but maybe, just maybe, we can learn to build more with less JavaScript. The web (and the planet) will thank us for it.