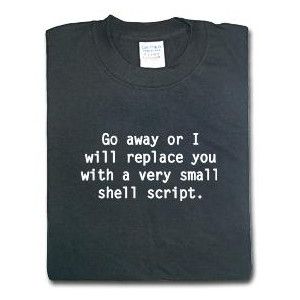

Back when I was younger, there was a T-shirt I desperately wanted. Black, minimalist, with a single line of text:

Go away or I will replace you with a very small shell script.

It was funny, but not because it was edgy. It was funny because it was true. Anyone who has spent time in telco, ops, or large enterprises knows exactly the type: entire roles that existed mostly as human glue around processes that could have been automated years ago. The shirt wasn’t a threat; it was an observation.

Fast-forward to today, and that joke aged disturbingly well.

Only now, the shell script has friends.

We call it AI, but let’s be precise: what most people interact with today is not intelligence in the human sense. It doesn’t reason, it doesn’t understand, and it doesn’t “think.”

It predicts.

Large Language Models are best described as GAC — Glorified Auto-Complete. If Google Search Suggest was the ape, LLMs are the upright-walking hominid.

Same evolutionary line. Vastly different impact.

And this matters, because a lot of fear and confusion comes from misunderstanding what’s actually being automated.
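To make “it predicts” concrete, here is a toy sketch of autocomplete in Python. It is nowhere near a real LLM: it assumes a word-level bigram table (plain counts of which word follows which) instead of a neural network over tokens, and the names `train_bigrams` and `autocomplete` are made up for this illustration. The only point is the mechanism: look at what came before, emit the most likely continuation, repeat.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def autocomplete(follows, prompt, max_words=5):
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # never saw this word followed by anything: nothing to predict
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical training data: the T-shirt slogan and nothing else.
corpus = "go away or i will replace you with a very small shell script"
model = train_bigrams(corpus)
print(autocomplete(model, "go away", max_words=4))
```

Trained on nothing but the T-shirt, it can only ever recite the T-shirt. There is no understanding anywhere in that loop, only counting. An LLM is trained on the same basic task, predicting what comes next, with vastly more text and a far richer model of context.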

The Real Question: Cognitive Effort Density

Every technological disruption eventually boils down to the same uncomfortable question:

How much cognitive effort does your job actually require?

Not how important it sounds. Not how many meetings it involves. Not how many dashboards you maintain.

But how much of your daily output is:

- Pattern recognition

- Template application

- Rule following

- Rephrasing known information

- Producing predictable responses

Because those things? They are exactly what GAC is very good at.

Historically, we thought automation would eliminate “simple” jobs first. Assembly lines, data entry, repetitive labor.

Reality disagrees.

LLMs don’t have hands. They have text.

So the first wave doesn’t hit factory workers. It hits:

- Middle management communication

- Customer support scripts

- Technical documentation

- Junior programming tasks

- Analysts who summarize instead of analyze

- Roles that exist mainly to translate between systems and people

If your job can be described as “taking input, applying known rules, and producing output,” then congratulations: you’re standing on very thin ice.

And no, wearing a white collar doesn’t help.

Why “They Took Our Jobs” Keeps Repeating

Every era has its version:

- The Luddites vs weaving machines

- Factory workers vs assembly lines

- Typists vs word processors

- Travel agents vs the internet

Each time, society panics. Each time, productivity explodes. Each time, new roles appear.

But here’s the twist: the transition cost keeps rising.

When a loom replaces a weaver, retraining is physical. When software replaces a clerk, retraining is technical. When GAC replaces cognitive labor, retraining becomes… existential.

The Illusion of Intelligence

GAC doesn’t know anything. It doesn’t understand truth. It doesn’t reason. It doesn’t care if it’s wrong.

And yet, for an alarming number of jobs, that’s enough.

Why?

Because many roles don’t actually require deep understanding. They require acceptable output. LLMs are exceptional at being convincing enough.

That’s the uncomfortable part.

The Jobs That Will Survive (and Thrive)

If GAC is good at pattern completion, then human value shifts to what it cannot do:

- Judgment under uncertainty

- Defining problems, not just solving them

- Accountability

- Cross-domain reasoning

- Understanding incentives, power, and human behavior

- Deciding what should not be done

In short: thinking, not typing.

Ironically, AI doesn’t kill thinking jobs; it kills jobs that pretend to be thinking jobs.

Maybe it’s time for an updated version of that old shirt:

Go away or I will replace you with a prompt.

Funny. Uncomfortable. Accurate.

Because the future won’t be humans vs AI.

It will be humans who understand what GAC is — versus humans who don’t.

And history is not kind to the latter.